1 Sentinel Data Quality Review and Characterization Distributed Programs

This chapter explains concepts underlying the Sentinel Data Quality Review and Characterization Distributed Programs which are shared across programs.

1.1 Distributed Programming

To evaluate data characteristics and overall quality, Sentinel Operations Center (SOC) has developed distributed code to query the content of the Sentinel Common Data Model (SCDM) formatted tables. The outputs from this process are meant to help determine whether the data conform to SCDM specifications, maintain integrity across variables and tables, and exhibit expected trends over time.

Both the format and the destination of the output generated by these programs will vary by the specific table being queried. These programs are designed to ensure that output and logs returned to Sentinel Operations Center for review is aggregate in form and does not included Data Partner sensitive information.

1.2 Technical Requirements

Sentinel requires that Data Partners meet or exceed the following minimum requirements:

| Item Description | Small (<100 GB ETL Size) | Medium (100–499 GB ETL Size) | Large (≥500 GB ETL Size) |

|---|---|---|---|

| SAS Version | Version 9.4 | Version 9.4 | Version 9.4 |

| OS Type | Workstation OS | Server OS | Server OS |

| Machine Type | Workstation | Server | Server or SAS Grid1 |

| Physical Cores per Sentinel Query | 1–4 | 1–4 | 1–4 |

| RAM (per core) | 8–16 GB | 8–16 GB | 8–16 GB |

| Storage Capacity (ETLs)2 | 3X size of latest approved ETL | 3X size of latest approved ETL | 3X size of latest approved ETL |

| Storage Capacity (QA Results) | 3X QA Results Size | 3X QA Results Size | 3X QA Results Size |

| IO Throughput (per core) | 150 MB/second | 150 MB/second | 150 MB/second |

1.3 Program Package Structure

Each distributed Program request package distributed by SOC is assigned a unique Request Identifier. SOC also uses a uniform folder structure to facilitate communications between SOC and Data Partners and to streamline file management. These are described below:

- dplocal

-

Contains output generated by the request that should remain with the DP (and may be used to facilitate follow-up queries).

- inputfiles

-

Contains all submodules, input data files, and lookup tables needed to execute a request. Input files contain information on what tables should be output and the type of analyses conducted on the variables in each table. Input files are created for each run of the QA program package by the SOC Quality Assurance team.

- msoc

-

Contains output generated by the local SAS program. This will be used by SOC for post-QA processing. All datasets, logfiles, and subfolders should be returned to SOC.

- sasprograms

-

Contains the master SAS program that must be modified by an SOC analyst with Data Partner specific information before program execution by the Data Partner.

1.4 Quality Checks

The QA Program Package and QA MIL Program Package quality checks are organized into three levels in order to assess pre-defined data quality measures and characteristics:

- Level 1 Quality Check

-

Level 1 data quality checks review the completeness and content of each variable in each table to ensure that the required variables contain data and conform to the formats specified by the SCDM specifications. For each SCDM variable, Level 1 data checks verify that data types, and variable lengths, are correct and reported values are acceptable. For example, ensuring that the variable

SEXin the Demographic table has a value ofF,M,A, orUis a Level 1 data check. Another example is ensuring that the variableMS_RESULT_Cin the Laboratory Result table is only populated with values ofPOSITIVE,NEGATIVE,BORDERLINE,UNDETERMINED, or a RANGE: start to end, with unit—e.g.,50\|100 MG/ML—for all laboratory tests. - Level 2 Quality Check

-

Level 2 data quality checks assess the logical relationship and integrity of data values within a variable or between two or more variables within and between tables. For example, the SCDM requires that the variable

ADMITTING_SOURCEin the Encounter table is populated only for inpatient, institutional, and emergency department encounters—i.e.,ENCTYPE=IP,IS, orED. A Level 2 data check would ensure thatADMITTING_SOURCEis populated only whenENCTYPEvalue isIP,IS, orED. - Level 3 Quality Check

-

Level 3 data quality checks examine data distributions and trends over time, both within a Data Partner’s database (by examining output by year and year/month) and across a Data Partner’s databases (by comparing updated SCDM tables to previous versions of the tables). For example, a Level 3 data check would ensure that there are no large, unexpected increases or decreases in diagnosis records over time.

1.5 RequestID

RequestID is a unique string which Sentinel uses for tracking distributed packages. It is a concatenation of the master program parameters ProjID, WPType, WPID, DPID, and VerID found in all of Sentinel’s distributed tools. These parameters represent the following:

- ProjID

-

Project identifier for internal SOC identification and tracking purposes.

- WPType

-

Work plan type for internal SOC identification and tracking—e.g.,

qarfor QA Request,qcrfor QA Rapid Covid Request, orqmrfor QA MIL Request. - WPID

-

Work plan identifier for SOC identification and tracking purposes. Follows the format

wpXXXwhereXXXis a three digit number that iterates with each Data Partner Refresh. - DPID

-

Data Partner identifier for SOC identification and tracking purposes. Is a unique 3-6 character long callsign uniquely identifying the Data Partner.

- VerID

-

Version identifier for SOC identification and tracking purposes. Follows the format

vXXwhereXXis a two digit number that iterates with each run of the QA Package within a given refresh.

The following example illustrates how the RequestID is constructed for the QA Package using the above parameters:

ProjID = soc;

WPType = qar;

WPID = wp001;

DPID = nsdp;

VerID = v01;The corresponding RequestID would be soc_qar_wp001_nsdp_v01. Please note that the root folder of the QA Package should be named identically to the RequestID when being run.

.

└── soc_qar_wp001_nsdp_v01

├── docs

├── dplocal

├── inputfiles

├── msoc

└── sasprograms1.6 Data Characteristics Sign-off Report

The Data Characteristics Sign-off Report is a summary report of all flags thrown by a given run of the QA Package and the QA MIL Package. The report is used by Data Partners to comment and sign-off on these flags and then returned to SOC. The distributed packages generate this report in the msoc folder, and it is named data_characteristics_sign_off_report_[dpid]_etl[#].xlsx where [dpid] is the Data Partner callsign and [#] is the ETL under review. For further details on the output of this report, see the Data Characteristics Sign-off Report specifications within Section @ref(#qar-msoc-folder).

In generating the Data Characteristics Sign-off Report, the QA Package calculates difference in the count of flags found in ETL under review and the previous ETL of the QA Package. This is accomplished in the QA Package by adding the previous ETL’s all_l1_l2_flags SAS dataset to the inputfiles directory of the QA Package prior to package execution, and in the QA MIL Package by adding the previous ETL’s all_l1_l2_flags and all_l3_flags SAS datasets to the inputfiles directory of the QA MIL Package prior to package execution.

1.7 Partitioned data

As datasets get larger—e.g., in excess of 20 years or more than 150 million PatIDs per Data Partner, data partners may decide to partition these large datasets into smaller datasets that can be queried in parallel to reduce querying run time.

1.7.1 Metadata and Parameters

For Data Partners that partition the data, the table names for partitioned tables will now be composed of a table prefix and a partition number—e.g., demographic1…demographic_N_, where N is the maximum partition number. The existing SAS macro variables for SCDM table variables will now either represent just the table-prefix (for a partitioned table) or the whole table name depending on whether the table is partitioned. Common Components will capture this additional metadata to assist querying of partitioned ETLs.

1.7.2 SCDM Partitioning Lookup Table

To facilitate efficient querying of partitioned data, Data Partners using partitioned data should create a crosswalk file that is specified in the _PARTABLE parameter containing two columns: PatID and PartitionID. Both should be numeric and use the smallest variable length required to store the maximum value in each. This table will contain one entry per PatID, with the corresponding PartitionID where that PatID is located. PatIDs should only ever be present in one partition.

1.7.3 Parallel Processing

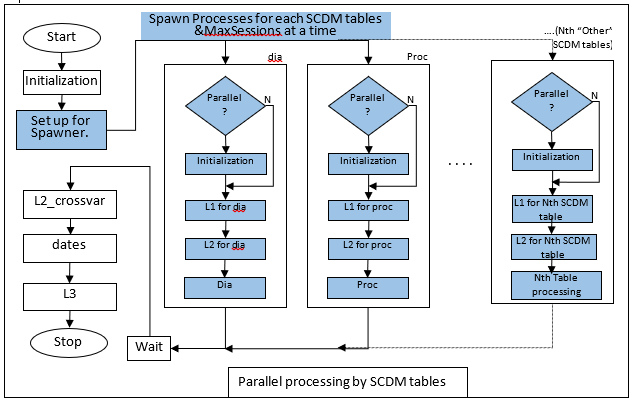

Sites with SAS/Connect may run the QA Package Partitioned Data version in parallel, using SAS Grid if available, or a single multi-core server. Parallelization is done by table, with a SAS session spawned for each table up to the number of sessions specified in _NumSessions. Within each session, the L1 checks and L2 intra-table checks are run for that given table.

Figure 1.1: Control Flow for Parallelized Processing

For large Data Partners to ensure adequate performance, it is highly recommended that SAS/CONNECT be installed on either a single server or be installed as part of a SAS Grid installation↩︎

Data Partners must reserve capacity to retain 3 ETLs at any given time: the ETL in production (e.g., ETL 2), the previous ETL (e.g., ETL 1), and an ETL in development (e.g., ETL 3).↩︎